Unity Hackweek XR Project

Prototyping two-handed interactions in XR

Please note that this project was created as a proof of concept to explore the XR space; Unity has no obligation to implement any of the features described here in the future.

While interning at Unity, I had the opportunity to participate in Hackweek, the company's internal week-long hackathon, and collaborate with a team of developers to prototype two-handed interactions in XR (extended reality, an umbrella term for virtual, augmented, and mixed reality). We created a variety of C# scripts for two-handed interactions, along with several demos in Unity that use them. These scripts could be bundled with Unity's XR Interaction Toolkit so developers can use them to easily create natural-feeling two-handed interactions.

Timeline – 1 week in Jun 2020

Role – Developer on a team of 12

Skills – XR design and development, Interaction design, Prototyping, Product thinking

Quickstart

Running the project

- Clone the repository

- Open the project in Unity version 2020.3 or later

- Connect your headset to your computer

- If you are on a PC, press Play to enter the scene; if you are on a Mac, build the project and deploy it to your headset

Alternatively, you can download the .apk here and sideload it onto your headset.


Context

Problem

Currently, the Unity XR Interaction Toolkit only natively supports one-handed interactions, and it's difficult for new developers to create custom two-handed interactions that feel smooth.

Searching YouTube turns up plenty of tutorials on creating two-handed interactions in Unity with the XR Interaction Toolkit. However, there is no standardized way of implementing them, the resulting interactions sometimes feel jittery and unnatural, and because the package is updated frequently, tutorials can quickly become outdated.

Goal

How might we make implementing natural-feeling, two-handed interactions in XR easier for developers?

Our goal was to create a collection of scripts that developers could use to easily implement a variety of two-handed interactions in XR. These scripts could be included in the Unity XR Interaction Toolkit and swapped in and out as needed. We also wanted to build a series of demo stations that use these scripts to show how they might be applied.

Solution

Final result

We created at least nine scripts that developers can use in their projects to easily implement two-handed interactions. Some of these scripts include:

- DynamicAttach, which allows attach points anywhere along the interactable's colliders
- SymmetricalAttach, which works well for objects like gardening scissors
- XRMultiGrabInteractable, which allows an interactable to be grabbed by more than one hand at a time
- XRMultiGrabStabilizedInteractable, which inherits from XRMultiGrabInteractable and makes the interaction more stable and fine-tuned for precise tasks
- XRTwoHandGrabAndTwist, which allows an object to be grabbed and twisted easily
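To make the idea behind a two-handed grab a little more concrete, here is a minimal, language-neutral sketch in Python. It is not the project's C# code (that lives in the repository) and it does not use any Unity API. It assumes one common approach: place the held object's center at the midpoint between the two hands, point its forward axis from the primary hand toward the secondary hand, and apply exponential smoothing as one possible way to reduce jitter. The function names `two_hand_pose` and `smooth` and the `alpha` parameter are hypothetical.

```python
import math
from typing import Tuple

# A 3D vector represented as an (x, y, z) tuple.
Vec3 = Tuple[float, float, float]


def add(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] + b[0], a[1] + b[1], a[2] + b[2])


def sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def scale(v: Vec3, s: float) -> Vec3:
    return (v[0] * s, v[1] * s, v[2] * s)


def two_hand_pose(primary: Vec3, secondary: Vec3) -> Tuple[Vec3, Vec3]:
    """Return (center, forward) for an object held in both hands.

    center  -- midpoint between the two hand positions
    forward -- unit vector pointing from the primary hand to the secondary hand
    """
    center = scale(add(primary, secondary), 0.5)
    delta = sub(secondary, primary)
    dist = math.sqrt(delta[0] ** 2 + delta[1] ** 2 + delta[2] ** 2)
    if dist < 1e-6:
        # The hands are (nearly) at the same point, so no axis is defined.
        raise ValueError("hands are too close together to define an axis")
    forward = scale(delta, 1.0 / dist)
    return center, forward


def smooth(previous: Vec3, target: Vec3, alpha: float) -> Vec3:
    """Exponential smoothing: move a fraction alpha of the way from previous to target."""
    if not 0.0 < alpha <= 1.0:
        raise ValueError("alpha must be in (0, 1]")
    return add(previous, scale(sub(target, previous), alpha))


if __name__ == "__main__":
    center, forward = two_hand_pose((0.0, 0.0, 0.0), (0.0, 0.0, 2.0))
    print(center, forward)  # (0.0, 0.0, 1.0) (0.0, 0.0, 1.0)
    print(smooth((0.0, 0.0, 0.0), (1.0, 1.0, 1.0), 0.5))  # (0.5, 0.5, 0.5)
```

In this sketch, a smaller `alpha` gives steadier but laggier motion, which is the kind of trade-off a stabilized two-handed interactable might need to tune for precise interactions.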

You can check out the GitHub repository for the project here.

The video below is a compilation of all the demos we made using the scripts:

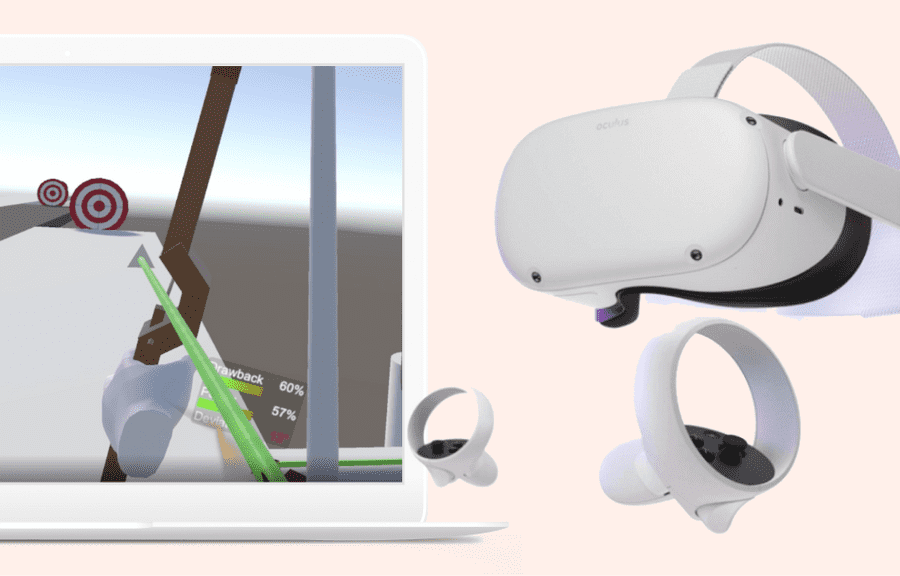

I personally built the bow-and-arrow and hockey stick interactions, as well as the animated hands that simulate grabbing and trigger interactions.

Reflecting back

Prior to this project, I had only tinkered with XR technologies on a few occasions, including working on a small HoloLens project at Microsoft and building a few simple apps with Unity and my Oculus Quest 2. I hadn't worked much with the toolkit's standard scripts, but this project taught me a lot about script design and about working on a 3D project in a large team.

I had a lot of fun working with the team, and everyone was really supportive and willing to help! I definitely learned a lot and hope to use some of these scripts in my own projects.